engineering a context-ful brain

I'm tired of writing essays whenever I want a model to use best practices in my codebase.

It looks like the new fad is "context engineering." Is this a reaction to Cursor gutting the `@codebase` feature? Probably yes?

I feel this pain often. I’ll ask an AI to extend an existing feature, carefully include four relevant files, and get back something almost correct. The gap is usually filled with context that lives only in my head—understanding the problem, weighing trade-offs, and articulating intent. That’s most of the work, and it’s hard to fully capture in text.

The benefit of a dialogue with an LLM is that we can surface and correct these gaps iteratively. But it’s also exhausting.

I spend a lot of time thinking about that gap. I'm able to offload small tasks, but steering the whole ship still requires my full concentration to make sure that changes align with overarching strategies. I write less code than ever, but I spend far more time preparing for

The promise of automated context engineering tools is twofold:

- Replicate patterns I already consider good practice.

- Help the model understand my intent by extracting semantic information from the structure of my codebase.

That second point is ambitious, but it’s what I really want: to minimize my effort in stitching together context and adding precise details every time I write a prompt. Ideally, the model could receive that context automatically.

Basically: how do I transfer world information to the model in a way that minimizes friction?

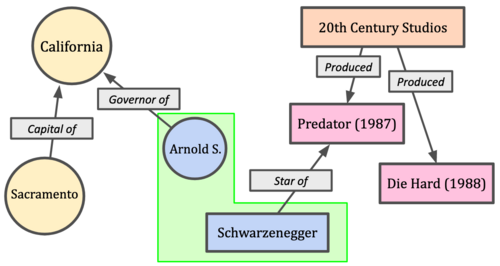

I use Obsidian. I would describe it as a "relational notes" system, where you can define relationships between individual notes. There are plenty of different philosophies around how to do this (e.g. Zettelkasten), but they amount to two rules: 1) make small notes and 2) connect them meaningfully. When done properly, they can produce these gorgeous graphs of knowledge.

In my experience, this looks really cool and takes way too long. Creating a note should be a painless experience. My uneasy minimum has been to just write daily notes and create "well-connected" nodes only when certain concepts show up across multiple days/projects.

This recently prodded me in the direction of building these knowledge networks using LLMs. What if you could write whatever into your notes app, and an LLM would identify concepts, summarize, and define relationships between them? What are the benefits there?

For one, your world knowledge is immediately transferred. Domain knowledge, interests, focus - even your TODO list could yield insights into the best way to design a feature. You could weight data by recency. This knowledge graph is auditable in plain text.
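A minimal sketch of the "weight by recency" and "auditable in plain text" ideas, assuming notes carry a date and a list of linked note titles. The `Note` class, the 30-day half-life, and the output format are all illustrative assumptions, not part of any existing tool.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Note:
    # Hypothetical note record: a title, the day it was written,
    # and the titles of the notes it links to.
    title: str
    written: date
    links: list[str] = field(default_factory=list)


def recency_weight(written: date, today: date, half_life_days: float = 30.0) -> float:
    """Exponential decay: a note loses half its weight every half_life_days."""
    age_days = max((today - written).days, 0)  # future-dated notes count as "today"
    return 0.5 ** (age_days / half_life_days)


def to_plain_text(notes: list[Note], today: date) -> str:
    """Dump the graph as one readable line per note, newest first."""
    lines = []
    for note in sorted(notes, key=lambda n: n.written, reverse=True):
        weight = recency_weight(note.written, today)
        links = ", ".join(f"[[{t}]]" for t in note.links) or "(none)"
        lines.append(f"{note.title} | weight={weight:.2f} | links: {links}")
    return "\n".join(lines)


if __name__ == "__main__":
    today = date(2025, 3, 1)
    notes = [
        Note("auth redesign", date(2025, 2, 27), ["TODO list"]),
        Note("TODO list", date(2025, 1, 30)),
    ]
    print(to_plain_text(notes, today))
```

The half-life is the only knob here: a shorter one biases the model toward whatever I've been thinking about this week, a longer one keeps older domain knowledge in play.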

This looks a lot like GraphRAG (paper). Can we have a GraphRAG for every person/codebase/project? Looks like we're just missing a tooling layer.

Sometimes, I embark on a project where I need guidance. For example, I've recently been writing a lot with React. To me, React is effectively a new language - with tradeoffs and patterns that I don't have the experience to be opinionated about.

I often find myself (desperately) tacking on something like

> Use best-practices. Consider common patterns used to build similar systems, which ensure the code is maintainable, modular and extensible.

The problem is that these models are trained on a huge corpus of code examples - some of them good, some of them bad. By throwing in tokens like "best practices" and "maintainable", I'm hoping to light up some good programmer patterns somewhere in the model's weights.

This kinda sucks.

Here, I am looking for a model to have context beyond both of us. I need structured references from someone who really knows what they're doing in this particular thing.

I wonder: if I create an Obsidian notebook all about building sane ML backend systems, might a React developer trade me theirs about building sane React webapps? Can we take a structured imprint of an expert's brain to induce a model to write smarter code?

At this point, you might be wondering whether we can make a gigantic database with only really good code, which we can then use to pull examples. Implicit in this is the expectation that the model can extract some semantic sense of what "good code/system design" is from effectively raw data.

There's another caveat with RAG systems too: embedding-based retrieval scores each document on its own, so the relationships between documents stay opaque.

The future here could be "less is more."

A relatively small, highly-structured database with well-articulated relationships seems like a sensible step in the right direction. I could write a query like "componentize my React app" and we could retrieve data according to cosine similarity AND PageRank.
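Here's a rough sketch of what "cosine similarity AND PageRank" could mean in practice: score each note by a weighted blend of its similarity to the query and its importance in the link graph. The blend weight `alpha`, the toy two-dimensional embeddings, and the note names are assumptions for illustration; a real system would get its vectors from an embedding model.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 50) -> dict[str, float]:
    """Plain power-iteration PageRank over a {note: [linked notes]} map."""
    nodes = list(links)
    if not nodes:
        return {}
    n = len(nodes)
    rank = {node: 1.0 / n for node in nodes}
    for _ in range(iterations):
        new_rank = {node: (1.0 - damping) / n for node in nodes}
        for node in nodes:
            targets = [t for t in links[node] if t in links]
            if not targets:
                targets = nodes  # dangling note: spread its rank evenly
            share = damping * rank[node] / len(targets)
            for target in targets:
                new_rank[target] += share
        rank = new_rank
    return rank


def retrieve(query_vec: list[float], embeddings: dict[str, list[float]],
             links: dict[str, list[str]], alpha: float = 0.7,
             top_k: int = 3) -> list[str]:
    """Rank notes by alpha * similarity + (1 - alpha) * normalized PageRank."""
    rank = pagerank(links)
    max_rank = max(rank.values(), default=1.0)
    scored = []
    for name, vec in embeddings.items():
        graph_score = rank.get(name, 0.0) / max_rank
        scored.append((alpha * cosine(query_vec, vec) + (1 - alpha) * graph_score, name))
    scored.sort(reverse=True)
    return [name for _, name in scored[:top_k]]


if __name__ == "__main__":
    # Toy data: "components" is the hub that other notes link to.
    links = {"hooks": ["components"], "sql": ["components"], "components": []}
    embeddings = {"hooks": [1.0, 0.0], "components": [0.9, 0.1], "sql": [0.0, 1.0]}
    print(retrieve([1.0, 0.0], embeddings, links, alpha=1.0))  # similarity only
    print(retrieve([1.0, 0.0], embeddings, links, alpha=0.7))  # blended
```

With this toy data, similarity alone puts `hooks` first, while the blend promotes `components` because two other notes link to it. That's the point of the graph term: a well-connected note can outrank a slightly closer but isolated one.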

People often gripe that companies only want senior developers to supervise AI agents that act like "overeager juniors". There's some truth to that.

> ❌ "Build a web scraper"

> ✅ "Build an async web scraper using BeautifulSoup that extracts product data from e-commerce sites, handles rate limiting, and stores results in PostgreSQL"

^ This isn't general intelligence. It's just moving around the hard part.

As a user of AI agents, I'd love it if my agents a) understood the scope and tooling available in my project and b) made informed & scalable choices about how to implement the solution.

There are two products built into this idea. The first is a tool which automatically structures your notes. The second is the ability to distill wisdom around certain tools and practices.

With an AI notes-organizer, minimizing friction is key. I'd want to import anything - code, documentation, text/audio notes, recorded meetings, pictures of whiteboard ERD sessions, etc.

I'd want some spatiotemporal resolution. Things change. Six months on, if I forget some arcane knowledge about how a feature was designed, I'd like to be able to easily find out.

"Collectivized" brains could already be a B2B product.

- Show me a map of all our microservices.

- List all the services which require the most maintenance.

- Show me services which tend to be bottlenecks.

- Show features that are partially implemented but incomplete.

- Based on previous projects, estimate the steps and time required to complete the following task: ...

Mostly, I love that we could carve off a piece of a world expert's wisdom on a thing. Think Karpathy's cookbook for training neural networks, but interactive and able to provide consulting help on the project you're currently working on.

This could easily be a marketplace - just like courses are today.

One common critique of the vibe-coding phenomenon is that there is a level of carelessness applied to certain critical systems - such as auth. Privacy and security are the responsibility of builders, but it shouldn't be the bottleneck to creating a new product. We've lowered the barrier to entry for so many creative endeavors - but we're missing model grounding in specific domains of expertise.

I've been musing on this for a little while now. Over Christmas, I busted out a POC for "AI-assisted prompt engineering." The pitch is that you could write any natural language in Cursor and slap on a `@promptify` to automatically add in the ideal instructions for the use-case. Users can upload prompts they find useful, and the API automatically chooses the prompts that match your needs and have the best track record.
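To make the selection step concrete, here's one way it could work. This is a guess at the shape, not the actual POC. It assumes each uploaded prompt carries a set of keywords and a tally of how often it led to a good outcome, then picks the prompt with the best product of keyword overlap and smoothed success rate. `StoredPrompt`, `jaccard`, and `promptify` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class StoredPrompt:
    # Hypothetical record for a user-uploaded prompt.
    text: str
    keywords: set[str]
    successes: int = 0  # times users reported a good outcome
    trials: int = 0     # times the prompt was used

    def success_rate(self) -> float:
        # Laplace smoothing so brand-new prompts start at 0.5 rather than 0.
        return (self.successes + 1) / (self.trials + 2)


def jaccard(a: set[str], b: set[str]) -> float:
    """Overlap between two keyword sets, from 0.0 to 1.0."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def promptify(request: str, library: list[StoredPrompt]) -> str:
    """Append the best-matching stored prompt, or return the request unchanged."""
    words = set(request.lower().split())
    best = max(
        library,
        key=lambda p: jaccard(words, p.keywords) * p.success_rate(),
        default=None,
    )
    if best is None or not (words & best.keywords):
        return request  # nothing relevant: don't pad the prompt
    return f"{request}\n\n{best.text}"


if __name__ == "__main__":
    library = [
        StoredPrompt("Prefer small function components and custom hooks.",
                     {"react", "component"}, successes=8, trials=10),
        StoredPrompt("Use parameterized queries and explain the query plan.",
                     {"sql", "query"}, successes=9, trials=10),
    ]
    print(promptify("refactor this react component", library))
```

Keyword overlap is a stand-in here; embedding similarity would likely match intent better, at the cost of being harder to audit.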

I figure a POC of this shouldn't be too hard. One thing at a time, though...